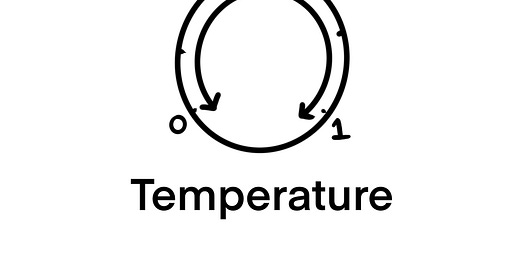

In AI, temperature is like a knob that reshapes an LLM’s probability distribution over the next word, making the output more predictable or more random.

As temperature → 0, the LLM almost always picks its most likely next word, so the responses become more deterministic and repetitive.

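As temperature → 1, the LLM samples from its unmodified distribution, so less likely words appear more often and the output is more varied and creative. Values above 1 push it further toward randomness.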

Example:

If I give the user input “The sun rises in the…”, the output is ‘East’ when the temperature is set to 0, but it can be ‘Ocean’ when the temperature is set close to 1.
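The example above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular model is implemented: the candidate words and their scores (`TOY_LOGITS`) are made up, and `next_word_probabilities` and `pick_next_word` are hypothetical helper names. Temperature 0 is treated here as "always take the top-scoring word", which is a common convention.

```python
import math
import random

# Hypothetical raw scores (logits) for the word after "The sun rises in the".
# The numbers are invented for illustration; a real model scores its whole vocabulary.
TOY_LOGITS = {"East": 5.0, "morning": 3.5, "sky": 2.5, "Ocean": 1.0}


def next_word_probabilities(logits, temperature):
    """Softmax over the logits after dividing each one by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive; 0 is handled as greedy decoding")
    scaled = {word: score / temperature for word, score in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    weights = {word: math.exp(s - top) for word, s in scaled.items()}
    total = sum(weights.values())
    return {word: w / total for word, w in weights.items()}


def pick_next_word(logits, temperature, rng=random):
    """Take the top word at temperature 0, otherwise sample from the scaled distribution."""
    if temperature == 0:
        return max(logits, key=logits.get)
    probs = next_word_probabilities(logits, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


if __name__ == "__main__":
    for t in (0, 0.2, 1.0, 1.5):
        rng = random.Random(0)  # fixed seed so repeated runs match
        samples = [pick_next_word(TOY_LOGITS, t, rng) for _ in range(10)]
        print(f"temperature={t}: {samples}")
```

With temperature 0 the function always returns ‘East’. As the temperature rises, ‘Ocean’ gets a small but real chance of being picked.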

Applications:

Low Temperature:

Customer Support Chatbots, especially when dealing with legal Q&A (these need accurate and consistent replies)

Code Generation (e.g., SQL queries, Python scripts, etc.)

High Temperature:

Generate Marketing Materials - Blogs, Social Media Content, etc.
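One way to apply this split in practice is a small lookup from task type to temperature. The sketch below is only illustrative: the task names, the helper `temperature_for`, and the specific values are assumptions chosen to match the low/high grouping above, not vendor recommendations.

```python
# Illustrative starting points only; these values are assumptions to tune per use case.
SUGGESTED_TEMPERATURE = {
    "customer_support": 0.0,  # consistent, repeatable answers
    "code_generation": 0.2,   # mostly deterministic, slight variation
    "marketing_copy": 0.9,    # more varied, creative wording
}

# Fallback for tasks not listed above (also an assumption).
DEFAULT_TEMPERATURE = 0.7


def temperature_for(task: str) -> float:
    """Return the suggested temperature for a task, or the default if unknown."""
    return SUGGESTED_TEMPERATURE.get(task, DEFAULT_TEMPERATURE)
```

For example, `temperature_for("code_generation")` returns the low value, and an unlisted task falls back to the default.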

In tools like OpenAI’s API, you’ll often see:

    import openai

    # Legacy interface from openai-python releases before 1.0
    response = openai.ChatCompletion.create(
        model="gpt-4",
        messages=[...],
        temperature=0.7,
    )

The line `temperature=0.7` tells the model how “creative” it should be while generating responses.

To summarize, temperature affects the probability distribution over the next word choices. A higher temperature flattens the probabilities, allowing less likely words to be picked. A lower temperature sharpens the probabilities, making the model stick to the most likely word.
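The flattening and sharpening described here correspond to the standard temperature-scaled softmax. The symbols are introduced only for illustration and are not from the text above: $z_i$ is the model's raw score (logit) for candidate word $i$, and $T > 0$ is the temperature.

```latex
p_i(T) = \frac{\exp(z_i / T)}{\sum_j \exp(z_j / T)}
```

At $T = 1$ this is the ordinary softmax. As $T \to 0$, the probability mass concentrates on the word with the largest $z_i$, and as $T$ grows the distribution approaches uniform, so less likely words are picked more often.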